Now, can we fix flashlights in games such that we don’t get a well-defined circle of lit area surrounded by a completely black environment? Light doesn’t work that way, it bounces and scatters, meaning that a room with a light in it should almost never be completely dark. I always end up ignoring the “adjust the gamma until some wiggle is just visible” setup pages and just blow the gamma out until I can actually see a reasonable amount in the dark areas.

Yes, really dark places should be really dark. But, once you add a light to the situation, they should be a lot less dark.

You just described ray tracing. The problem is, it’s incredibly computationally expensive. That’s why DLSS and FSR were created to try and make up for the slow framerates.

I’ve seen an awesome “kludge” method where, instead of simulating billions of photons bouncing in billions of directions off every surface in the entire world, they are taking extremely low resolution cube map snapshots from the perspective of surfaces on a “one per square(area)” basis once every couple frames and blending between them over distance to inform the diffuse lighting of a scene as if it were ambient light mapping rather than direct light. Which is cool because not only can it represent the brightness of emissive textures, but it also makes it less necessary to manually fill scenes with manually placed key lights, fill lights, and backlights.

Those are light probes, but they don’t update well, because you have to render the world from their point of view frequently, so they’re not suited for dynamic environments.

They don’t need to update well; they’re a compromise to achieve slightly more reactive lighting than ‘baked’ ambient lights. Perhaps one could describe it as ‘parbaked’. Only the ones directly affected by changes of scene conditions need to be updated, and some tentative precalculations for “likely” changes can be tackled in advance, while pre-established probes contribute no additional process load because they aren’t being updated unless, as previously stated, something acts on them. IF direct light changes and “sticks” long enough to affect the probes, any perceived ‘lag’ in the light changes will be glossed over by the player’s brain as “oh, my character’s eyes are adjusting, neat how they accommodated for that,” even though it’s not actually intentional but rather a drawback of the technology’s limitations.
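To make the “only update what changed” idea a bit more concrete, here is a rough Python sketch. Everything in it is hypothetical: the `Probe` class, the `dirty` flag, the `capture` callback, and especially the inverse-square-distance blend, which is just the simplest weighting I could think of and not how any particular engine does it.

```python
from dataclasses import dataclass

@dataclass
class Probe:
    position: tuple        # (x, y, z) in world space
    ambient: tuple         # cached RGB from the last low-res capture
    dirty: bool = False    # set when something nearby changed

def refresh(probes, capture):
    """Re-capture only the probes flagged dirty; return how many were updated."""
    updated = 0
    for probe in probes:
        if probe.dirty:
            probe.ambient = capture(probe.position)  # hypothetical render callback
            probe.dirty = False
            updated += 1
    return updated

def sample_ambient(probes, point):
    """Blend cached probe colours, weighting nearer probes more heavily."""
    total = [0.0, 0.0, 0.0]
    weight_sum = 0.0
    for probe in probes:
        dist_sq = sum((a - b) ** 2 for a, b in zip(probe.position, point))
        weight = 1.0 / (dist_sq + 1e-6)  # small epsilon avoids division by zero
        for i in range(3):
            total[i] += weight * probe.ambient[i]
        weight_sum += weight
    return tuple(channel / weight_sum for channel in total)
```

Probes that nothing has touched cost nothing in `refresh`, which is the whole point of the compromise; the blend just reads whatever was cached last.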

I am not educated enough to understand this comment

That’s exactly the sort of thing his work improved. He figured out that graphics hardware assumed all lighting intensities were linear when in fact it scaled dramatically as the RGB value increased.

Example: A red value of 128 out of 255 should be 50% of the maximum brightness. That’s what the graphics cards and likely the programmers assumed, but the actual output was 22% brightness.

So you would have areas that were extremely bright immediately cut off into areas that were extremely dark.
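If anyone wants to check the 128 → 22% figure, here is a quick Python sketch. It assumes a plain 2.2 power curve for the display (my assumption; real sRGB uses a slightly different piecewise curve that gives nearly the same answer), and the function names are made up for illustration.

```python
GAMMA = 2.2  # assumed display gamma, not the exact sRGB curve

def displayed_brightness(value: int) -> float:
    """Fraction of full brightness the display shows for an 8-bit channel value."""
    return (value / 255) ** GAMMA

def value_for_brightness(fraction: float) -> int:
    """8-bit value you would need to store to actually show that fraction."""
    return round(255 * fraction ** (1 / GAMMA))

print(displayed_brightness(128))   # roughly 0.22, not 0.5
print(value_for_brightness(0.5))   # the value that really gives half brightness
```

Under that assumption, half brightness sits around 186, not 128, so anything treating 128 as the midpoint ends up much too dark.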

“It’s a bit technical,” begins Birdwell, “but the simple version is that graphics cards at the time always stored RGB textures and even displayed everything as non linear intensities, meaning that an 8 bit RGB value of 128 encodes a pixel that’s about 22% as bright as a value of 255, but the graphics hardware was doing lighting calculations as though everything was linear.

“The net result was that lighting always looked off. If you were trying to shade something that was curved, the dimming due to the surface angle aiming away from the light source would get darker way too quickly. Just like the example above, something that was supposed to end up looking 50% as bright as full intensity ended up looking only 22% as bright on the display. It looked very unnatural, instead of a nice curve everything was shaded way too extreme, rounded shapes looked oddly exaggerated and there wasn’t any way to get things to work in the general case.”
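The “gets darker way too quickly” part can be sketched in a few lines of Python. This assumes simple cosine (Lambert-style) diffuse shading and a 2.2 display gamma. Both are my assumptions for illustration, not something the quote specifies, and the function names are hypothetical.

```python
import math

GAMMA = 2.2  # assumed display gamma

def diffuse(angle_deg: float) -> float:
    """Cosine falloff as the surface turns away from the light."""
    return max(0.0, math.cos(math.radians(angle_deg)))

def shown_naive(angle_deg: float) -> float:
    # Lighting result is written out as-is; the display then applies its gamma.
    return diffuse(angle_deg) ** GAMMA

def shown_corrected(angle_deg: float) -> float:
    # Encode the linear result first so the display's gamma cancels out.
    encoded = diffuse(angle_deg) ** (1 / GAMMA)
    return encoded ** GAMMA

for angle in (0, 30, 60, 80):
    print(angle, round(shown_naive(angle), 3), round(shown_corrected(angle), 3))
```

At 60 degrees the surface should look half as bright as head-on, but the naive path shows it at roughly 22%, which is the exaggerated falloff described in the quote.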

The guy was a fine arts major too. Bunch of stem majors couldn’t figure it out for themselves.

As a person who tends to come from the technical side, this doesn’t surprise me. Without a deep understanding of the subject, how would you come up with an accurate formula or algorithm to meet your needs? And with lighting in a physical space, that would be an arts major, not a math or computing major.

Most of my best work (which I’ll grant is limited) came from working with experts in their field and producing systems that matched their perspectives. I don’t think this diminishes his accomplishments, but rather emphasizes the importance of seeking out those experts and gaining an understanding of the subject matter you’re working with to produce better results.

Interesting read.

Now can somebody please fix the horrible volume sliders on everything.

Go on

I’m impressed by how well my Steam Deck plays even unsupported games like Estival Versus.

I just wish they bundled it with a browser. Any browser, even Internet Explorer, would have been better than an “Install Firefox” icon that takes me to a broken store. I had to search for and enter cryptic commands to get started. I’m familiar with Debian distros and APT, what the hell is a Flatpak? Imagine coming from Windows or macOS and your first experience of Linux is the store being broken. It was frustrating for me because even with 20 years of using and installing Linux, at least the base installation always comes with some functioning browser even if the repos are out of sync or dead.

there is chrome now

I think modern graphics cards are programmable enough that getting the gamma correction right is on the devs now. Which is why it’s commonly wrong (not in video games and engines, they mostly know what they’re doing). Windows image viewer, ImageGlass, Firefox, and even Blender do the color blending in images without gamma correction (for its actual rendering, Blender does things properly in the XYZ color space; it’s just the image sampling that’s different, and only in Cycles). It’s basically the standard, even though it leads to these weird zooming effects on pixel-perfect images as well as color darkening and weird hue shifts, while being imperceptibly different in all other cases.

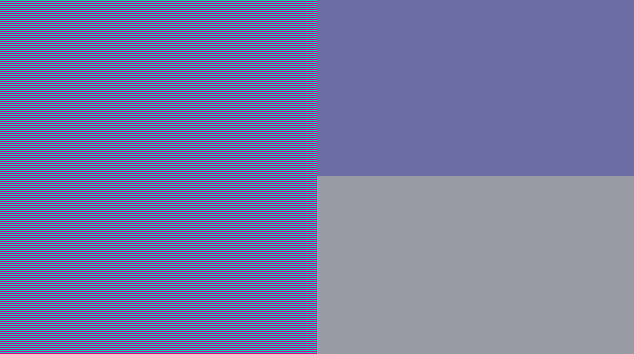

If you want to test a program yourself, use this image:

Try zooming in and out. Even if the image is scaled, the left side should look the same as the bottom of the right side, not the top. It should also look roughly like the same color regardless of its scale (excluding some moire patterns).
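For the curious, here is roughly what goes wrong when a program scales without gamma correction, as a small Python sketch. It uses the standard sRGB transfer functions, but the function names and the alternating black/white pixel row are my own assumptions for illustration and not necessarily the pattern in the test image.

```python
def srgb_to_linear(value: int) -> float:
    """Decode an 8-bit sRGB channel value to linear light (0..1)."""
    c = value / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def linear_to_srgb(x: float) -> int:
    """Encode linear light (0..1) back to an 8-bit sRGB channel value."""
    c = x * 12.92 if x <= 0.0031308 else 1.055 * x ** (1 / 2.4) - 0.055
    return round(255 * c)

def downscale_naive(pixels):
    # Averages the stored values directly, which is what many viewers do.
    return round(sum(pixels) / len(pixels))

def downscale_linear(pixels):
    # Averages in linear light, then re-encodes.
    mean = sum(srgb_to_linear(p) for p in pixels) / len(pixels)
    return linear_to_srgb(mean)

stripes = [0, 255, 0, 255]  # hypothetical pixel-perfect pattern
print(downscale_naive(stripes), downscale_linear(stripes))
```

The naive average lands near 128 while the linear-light average lands near 188, which is why a fine pattern appears to get darker when a program scales it the naive way. Solid colors come out the same either way, so the difference only shows up on high-contrast detail.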